During the Relativity Fest 2023 keynote, our chief product officer, Chris Brown, unveiled Relativity aiR for Review—Relativity’s first generative AI solution. After months of planning, building, and testing, we couldn’t be more excited to finally share it with all of you.

aiR for Review is designed to substantially accelerate litigation or investigative review by helping users locate data responsive to discovery requests and material related to different legal issues. The product will release with limited availability by the end of this year and is the first in a planned suite of aiR solutions that will use generative AI to empower our users with newfound efficiency and defensible results, at scale.

Shortly after the keynote, some of our Relativity AI experts presented a jam-packed session that outlined how Relativity is using generative AI to transform the review and investigation experience—with aiR for Review, as well as other ongoing experiments.

Level-Setting in a New AI Landscape

While legal professionals are eager to enter a new AI era, each person arrives with a different level of expertise and understanding. Recognizing this, Aron Ahmadia, senior director of applied science at Relativity, brought the audience onto common ground with an overview of generative AI. He started by highlighting Relativity’s AI Principles, stressing the importance of these commitments when exploring the new frontiers of AI technology. Then, after defining key terms such as generative AI, ChatGPT, and GPT-4 (they’re all different!) and dispelling some common myths (guess what? Generative AI can be used for classification tasks like responsiveness), he emphasized the need to take measured, defensible approaches when building these solutions in order to establish confidence and trust.

“Generative AI is disruptive,” acknowledged Aron. “We are paying attention to the value it is going to create, but also the risks. For our first products, Relativity is taking a bottoms-up, document-level approach. You can review the AI’s work, validate it, and then apply it at scale.”

With this context established, Aron turned things over to Elise Tropiano, director of product at Relativity, to demonstrate how aiR for Review applies this approach to accelerate document review.

A Deep Dive into aiR for Review

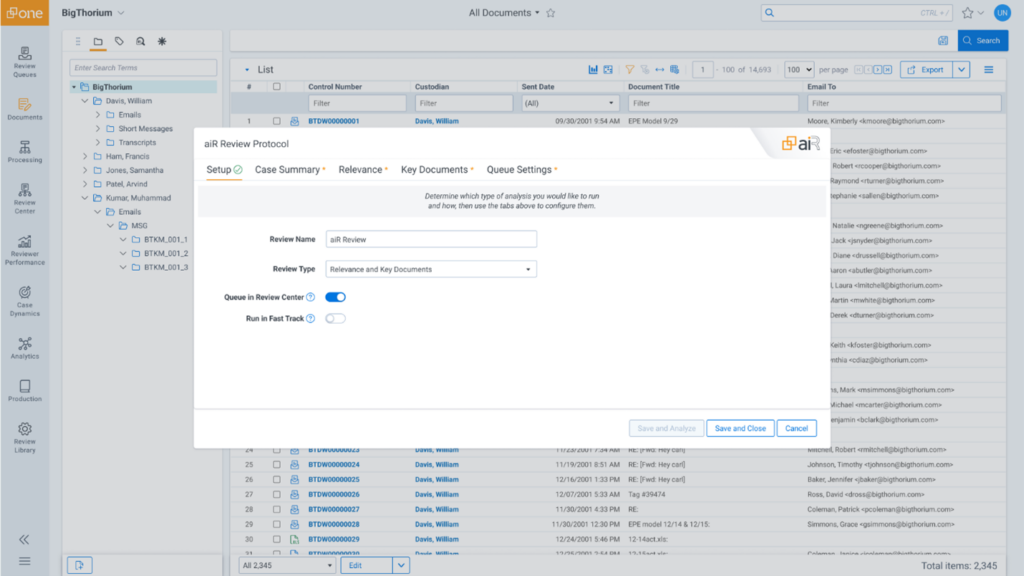

Building on the keynote presentation, Elise walked through aiR for Review’s workflows in greater detail. The solution targets three specific use cases:

- Responsiveness review: predicting documents responsive to a request for production

- Issues review: locating material relating to different legal issues

- Key document identification: finding documents important to a case or investigation

To get these insights, a user answers a set of prescribed questions that capture background information on their matter, runs the analysis, and then assesses aiR’s recommendations on the document list page. For each document, users can easily view the citations and the detailed reasoning that led aiR to its prediction.

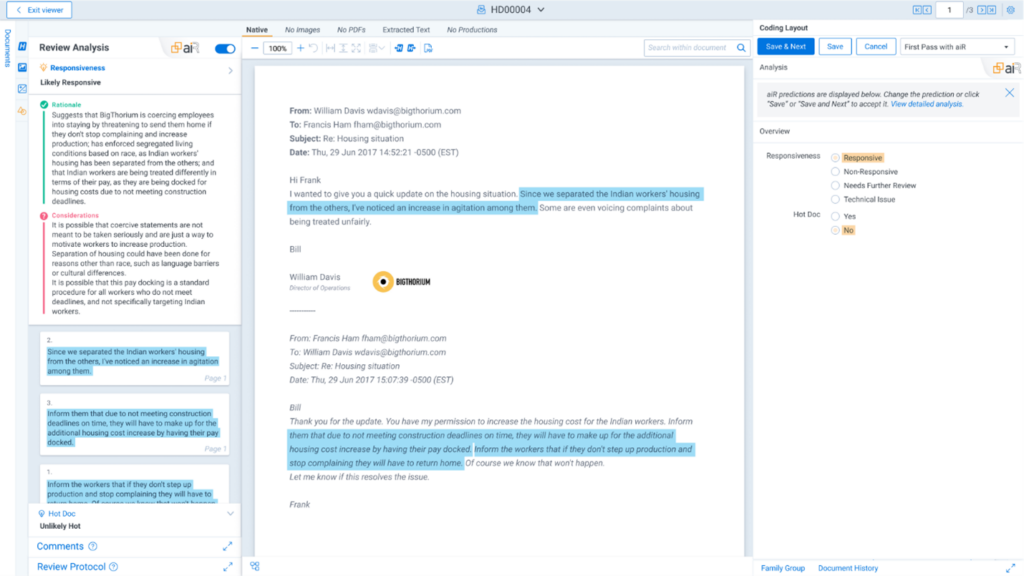

Elise then showed how these recommendations can be viewed directly in the context of a document, with the relevant citation highlighted, an option to carry decisions into the coding panel, and commenting capabilities for recording notes or adjustments to the analysis inputs.

“aiR for Review makes review more efficient and consistent,” stated Elise. “In a statistically significant experiment that leveraged a review protocol, GPT-4 found 97 percent of relevant documents. The way that was achieved … has made its way into the aiR for Review experience.”

Custom Models for Complex Behaviors: Built For You, By You

Finding a “smoking gun” document is challenging, especially in cases and investigations that deal with emotional content or unethical behavior. Ivan Alfaro, senior product manager at Relativity, acknowledged that in these matters, customers often need to find extremely specific content that indicates the presence of fraud, bullying, or discrimination.

“We see an opportunity to [use generative AI to] empower our customers to build their own customizable and reusable content detector models, in a matter of hours,” said Ivan. “The goal is for customers to be able to tailor these models to the unique needs of their cases, without having expertise in machine learning.”

Experiments to develop this new product are ongoing, but Ivan shared the current vision. Users would describe what they are looking for in their data in plain language. aiR would then generate a set of content examples meant to represent what the user wants to find. The user would review this output to confirm which examples were relevant and which were not, creating a feedback loop with the AI that tailors the model to the specific use case.

“The value here is that you can create a model to identify any kind of content,” Ivan shared. “That is a lot of flexibility and innovation at [a user’s] fingertips.”

Tackling the Nuance of Privilege Review

In the final portion of the session, Peter Haller, another director of product at Relativity, described Relativity’s experiments focused on accelerating and improving privilege review.

“Privilege review has unique challenges,” explained Peter. “Privilege review is nuanced, and privilege log creation is tedious. Context that doesn’t always live in the document you are reviewing is wildly important to making privilege calls.”

In 2024, Relativity will deliver Relativity Privilege Identify, a solution aimed at tackling these tough, context-based challenges with AI and reducing the risk, cost, and time of privilege review. In addition, Peter and his team are exploring how generative AI can help deliver better results, both on its own and in conjunction with the existing engine and capabilities that power Privilege Identify.

“There are two areas where we see potential improvements,” stated Peter. “One is reducing the noise … delivering higher precision around what is potentially privilege. The other is continuing to reduce the amount of human annotation and confirmation needed.”

The Discussion Continues …

Despite packing the 45-minute session with valuable information and multiple solution overviews, the speakers had a few minutes left to answer questions. Many concerned the types of data that could be analyzed and the nuances between aiR for Review’s specific classifications.

But to close, one attendee asked: “Do you see a point where we get comfortable not having to check these predictions? Maybe, for example, looking at only 10 percent of them for quality control?”

“With any technology-assisted review process, at some point you’ll get comfortable with the predictions the model is making and then you don’t review everything,” answered Aron. “But where that is, that is going to be up to you, up to your clients, and dependent on the specific matter.”

With that, the team was officially out of time, but they generously agreed to stay behind and answer more questions. As guests left their seats, lines began to form, with attendees clearly eager to take advantage of the offer.

Want to learn more about how to transform review with generative AI? Register for our upcoming webinar and hear directly from customers who have spent the past few months piloting aiR for Review and accelerating their core workflows. You can also reach out to our team (or your account executive) to chat more in the meantime.

Sarah Green is a product marketing manager at Relativity, leading go-to-market strategies for the company’s newest innovations and partnering closely with cross-functional teams on product launches and adoption activities.