Litigation teams’ concerns about generative AI aren’t limited to hallucinations or the differences between human and machine reviews. Alarm bells are ringing at the rising challenge of deepfake evidence: AI-generated data that is fabricated to create “proof” of a lie and is extremely difficult to discern as artificial with the naked eye.

Popular headlines about deepfakes often focus on areas like defamation, intellectual property theft, and fraudulent impersonation. But the risk of deepfake evidence emerging in high-stakes litigation feels increasingly real, and legal experts are actively discussing it.

Claims of deepfake legal evidence are already surfacing in U.S. courts (see: United States v. Guy Wesley Reffitt, United States v. Anthony Williams, United States v. Joshua Christopher Doolin, and Sz Huang et al v. Tesla, Inc. et al). Concerns about deepfakes feel especially acute in this moment, when audio and video evidence are on the rise in litigation. (At Relativity, we ran the numbers and found a 40 percent year-over-year growth in audio files and a 130 percent growth in video files in RelativityOne.)

At Relativity Fest Chicago last year, a panel of experts spoke to a standing-room-only audience about these challenges and some potential solutions. will shift the perspective from the problem space toward a solution-based approach. Speakers for our “Deepfakes in e-Discovery” session included:

- Chris Haley, VP, Practice Empowerment aiR, Relativity (moderator)

- Jerry Bui, Founder & CEO, Right Forensics

- Mary Mack, CEO, EDRM

- Stephen Dooley, Director of Electronic Discovery and Litigation Support, Sullivan & Cromwell

Here are their thoughts on what modern legal and technology professionals can do to mitigate the risks posed by deepfake evidence in their matters, evolve their careers, and help preserve the integrity of our justice system.

The Nature and Prevalence of Deepfake Evidence

Jerry Bui, founder and CEO of Right Forensics, opened the panel with some definitions and background on deepfakes in a legal context.

“I like to think of deepfakes falling under the umbrella of synthetic—AI-generated—media. False media has been around forever, but it takes on a whole new meaning when you have synthetic media that’s created specifically to deceive or mislead,” he explained. “You can use synthetic media for good things, as I do, but there is a category of nefarious things, like creating an AI avatar of someone without their consent, which is often illegal.”

While multimedia data was once a rarity in litigation reviews, it’s become increasingly common in e-discovery exchanges in the last couple of years. This makes the threat of deepfake evidence feel particularly dangerous: if legal teams are new to reviewing any audio and video data, how vulnerable might they be to synthetic content?

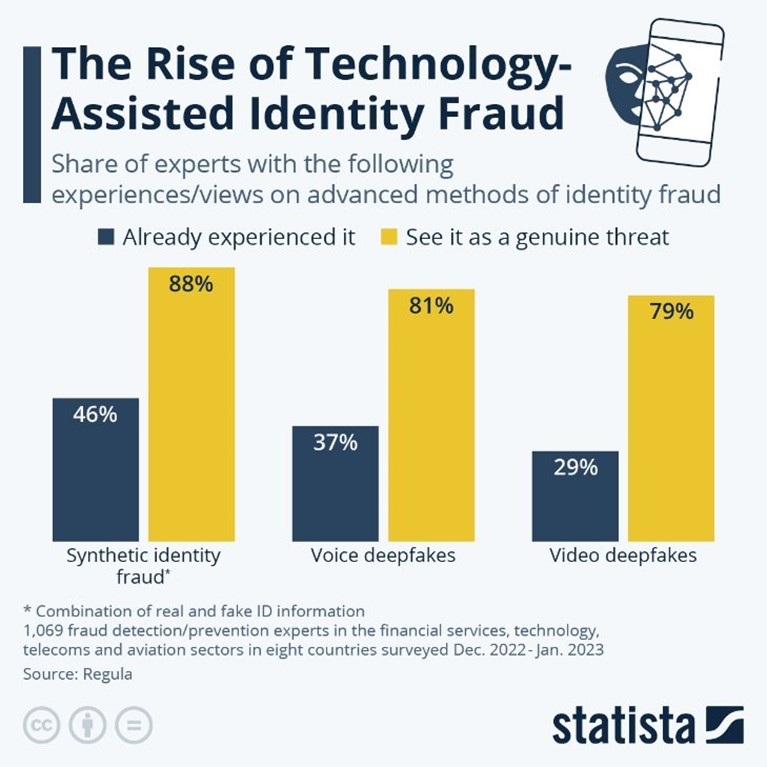

Beyond litigation, the rise of AI-enabled fraud at large adds to the weight of this threat.

“We’re seeing an increase in voice, video, and synthetic identity fraud overall. There is explosive growth in AI-powered fraud. There are 100 apps out there where you can convert your images into ultimate versions of yourself that look really good, and those are just the commercially available ones. The ones driven around national secrets and other uses—those are highly sophisticated, and generally not discernible by the human eye,” Stephen Dooley, a director at Sullivan & Cromwell said. “If my e-discovery hat came on: Where’s the metadata? How’s it stored? Is there something forensically we need to consider?”

It’s well known that e-discovery trends follow business trends with a bit of a long tail: the trickle-down effect of new applications and platforms inevitably land in scope of some matter or another down the road, and litigation teams must grapple with new data types accordingly.

“Before the Federal Rules of Civil Procedure changed to make it very clear that electronic evidence needed to be dealt with, a feeling in the legal community was that, ‘if you don’t ask for it, you don’t have to get it—we’ll wait until there’s case law.’ But in 2006, the rules exploded and electronic evidence was authenticated and brought into court. Over the years, the rules continued to change,” Mary Mack, from EDRM, explained to Fest attendees. “Judge Paul Grimm made it easier for us to get e-evidence in the court system and to authenticate it with a certification that the person or process was something that could be relied upon.”

The conversation has opened about rules changing again, with the prevalence of generative AI in mind.

“Fast forward to deepfakes, and now we have to assume we can’t believe our eyes or ears. And if you can’t believe your eyes or ears, loosely quoting Grossman, what are we going to do when we get to the arbiter of truth? Dr. Maura Grossman and Judge Paul Grimm (ret.) have a proposal to change the rules so that when we want to admit evidence, a different and more rigorous process is there for things deemed deepfake,” Mary said.

She continued: “Why go to a rule change all of a sudden? We’re not seeing written opinions on this. We’re seeing it more in news items, and they’re being dealt with in courts, but judicial opinions are not here yet. We’re a little early, but we want to be early—because this calls into question the whole justice system and people’s feelings about it and the work we do.”

“I think being earlier is good. If you’re seeing it out in the wild and in the criminal realm, it’s just a matter of time before it comes to civil cases,” Jerry added. “Interpol is seeing a lot of activity regarding authentication. The increasing complexity and frequency of these authentication methods are being defeated with deepfake tools.”

Data Authentication Tactics and Timelines

All of this means that there is no skeleton key for AI-generated data authentication. We likely aren’t waiting for a single tool that can reliably identify deepfakes from every source, forever and ever. More accurately, the “good guys” have to keep pace with the “bad guys”—matching their innovations and adaptations step for step.

Having reliable experts well-trained in this discipline will be as essential as the technology they’ll use to do it.

“As we try to authenticate evidence, theories and questions can be questioned. It’s so early that the testing is not here yet for many technologies. I see a very big need for people to be able to do this work and qualify as expert witnesses and give their considered opinion using various tools and techniques that can be used to determine if something is more or less likely to be deepfaked,” Mary said. “We don’t have this yet for deepfakes, but it is important.”

Jerry added: “What Grimm and Grossman did in the presentation to the advisory committee for rules is distinguish whether a jury can see evidence and make a determination for themselves, or if judges should be gatekeepers for this, or if they’re qualified for that. It speaks to the judge’s role here: if Grossman and Grimm have their way, it will be the judge who will be responsible for this gatekeeping.”

He noted that the existing rules around expert certifiers can get fuzzy with cloud technology as well as AI considerations. Moving toward more intentional standards in these regards will, Jerry argued, be important in the battle against deepfakes.

“FRCP 902(13) states that experts certify system or process; 902(14) states that experts certify documents themselves. As this was a standard process when we had hard disk forensics and created hash values, now that things are in the cloud we’re falling away from hashing and verification,” Jerry continued. “So I feel we’re losing some rigor and defensibility when it comes to verifying the completeness of captures from the cloud. FRCE 702 focuses on standards for expert witnesses. You can expect, once the testing goes into place and precedent is there around deepfake authentication, we’ll be challenged to meet that bar as expert witnesses.”

Mary explained that EDRM has met with Jerry, Gil Avriel, co-founder, CSO, and COO at Clarity, an AI detection provider, and others to really dig into the potential for e-discovery teams to evaluate for deepfakes at each stage of a matter.

“Looking at the EDRM model, where would deepfake detection be helpful? The first box we looked at was presentation; in court, you’ll want to know if evidence you’re submitting as an exhibit is a deepfake. But isn’t that kind of a little too late? So what about during ECA? Is that a place where we should be screening for deepfakes? When you’re trying to figure out whether to settle and if you have evidence to bring forward or defend a position, or impeach a witness, wouldn’t it be good to know this during, say, Identification?” Mary recalled for Fest attendees. “And in fact, why not filter at collection? Imagine if we can stack rank whether something has deepfake characteristics, is more or less likely to be deepfake, and then when you process to review. We already categorize things as hot docs; we probably need another category of ‘believability.’ And when you’re preparing a production, you want a QC mechanism like privilege checks; you don’t want to give deepfake evidence over.”

You get the idea, right?

“It’s not just one box, I don’t think. All the boxes on this EDRM model, with stakeholders potentially involved, can benefit from this knowledge. See our paper for details,” Mary advised, “but to sum up, you can find an expert witness to get information debunked or accepted in court. “In the middle of a project, with review, take a look at review teams to help steward this. And all the way on the left, forensics and IT people doing collections can help assess for deepfake potential.”

“One point I gravitated to was that this sets up requirements. These are the objectives we want to test out. But the biggest factor is: can it scale, and how quickly? Multimedia content can be gigabytes in size, and some cases involve massive amounts of that—which could be terabytes of data,” Stephen pointed out. “Do we filter more up front? Is there a transcription or translation component we can apply first? We need to figure this out—figure out if the bandwidth and expense are worthwhile. For each case, talk through burden and strategically align with clients to see how this is practically going to work.”

Jerry seconded the proportionality concern: “I had a case with 5 terabytes of data with potentially relevant multimedia on it. Do we suggest an expert is hired to authenticate every single piece? No. Begin by suggesting using tech as an automated scan to give you some sense of likelihood for deepfake generation inside of it, and move from there.”

Using Data Forensics to Combat Deepfakes

Fortunately, the experts are already waiting—and ready to adapt their forensics skills for this Brave New World of AI-generated chaos.

“Clarifying the role of a forensic expert: it’s not as if we’ll need Musk’s Neuralink to give us new eyes to confirm deepfake material. We can be fooled too. But if we’re brought a piece of media with high-ranking probability for deepfake, we can perform traditional digital forensics: check out a custodian’s computer for deepfake tools, look at their internet history for possible searches of deepfake sites, see if any metadata is missing or blank,” Jerry explained at Relativity Fest 2024. “For those doing multimedia forensics and forensic artifact trails, that’s what we’ll continue to do. We have the training to know where to look while understanding our purpose in the context of the litigation. We can search prompt history. Do they have an unaltered version of the media on their computer? It’s not about having a bionic eye to figure it out, but using traditional forensic approaches on a particular piece of evidence.”

A Fest attendee asked a question about an emerging solution to the deepfake problem: “Can you comment on the role of watermarking as a means of detection?”

Jerry responded to this one, saying: “There are several organizations providing us with watermarking capabilities, if not the actual generative AI platforms themselves. Content Authenticity Initiative has a C2PA protocol which defines the hashing of these materials—so not quite watermarking, but similar. This hashing is created by participants.”

It’s the participants who will make watermarking a viable, popular option for tracking AI-generated data, Jerry said.

“Creators of media, corporate entities, academics—these participants hash files and send them to the CAI server as a way to create a provenance trail/tracking trail, across multimedia handling systems, to see how information was received and how it moves through that system. The hope is that we’ll be able to see manipulation, with entry and exit points, every time it’s used, and track back to the media’s original creation to get information,” he explained.

Another audience member asked an insightful question about the potential to evolve one’s career to keep pace with deepfake and other AI issues: “If you want to change the trajectory of your career and become this kind of expert, what education would you recommend?”

“Creating the deepfakes!” Jerry said immediately.

“I know that Clarity has a red team creating deepfakes, trying to penetrate systems and trick the process,” Stephen added. “When you have that governance within an organization and are thinking like that, it helps you be as prepared as you can.”

Chris Haley, too, was excited about this question. He echoed Jerry, adding: “Definitely try it for yourself. This is a great thing about using ChatGPT and other tools; I love tinkering, so it’s fun for the right reasons.”

“There are times in tech where if you know just a little bit, you can jump right in and do the thing. It’s not all settled and institutionalized in a college course or certification program somewhere. Wherever you sit in the e-discovery ecosystem, look and see where deepfakes would be applicable to you and start charting your own course,” Mary advised. “I recommend Ralph Losey’s two–part blog on Grossman and Grimm’s work, which includes some of the proposal out in front of rules committee.”

And Jerry finished this engaging session by saying: “Jump in; the water’s warm! What’s fun about being a digital forensics examiner is you get a chance to think like a criminal without actually being a criminal.”